
Recently, the Mozilla Foundation made a strong case for opening up Large Language Models (LLMs) to the world. They believe that doing so can make these AI models more transparent and accountable, and can encourage everyone to chip in and make them better. This idea got me thinking about the different shades of 'open' in the tech world, and how each comes with its own set of goodies and gotchas.

Shades of Open

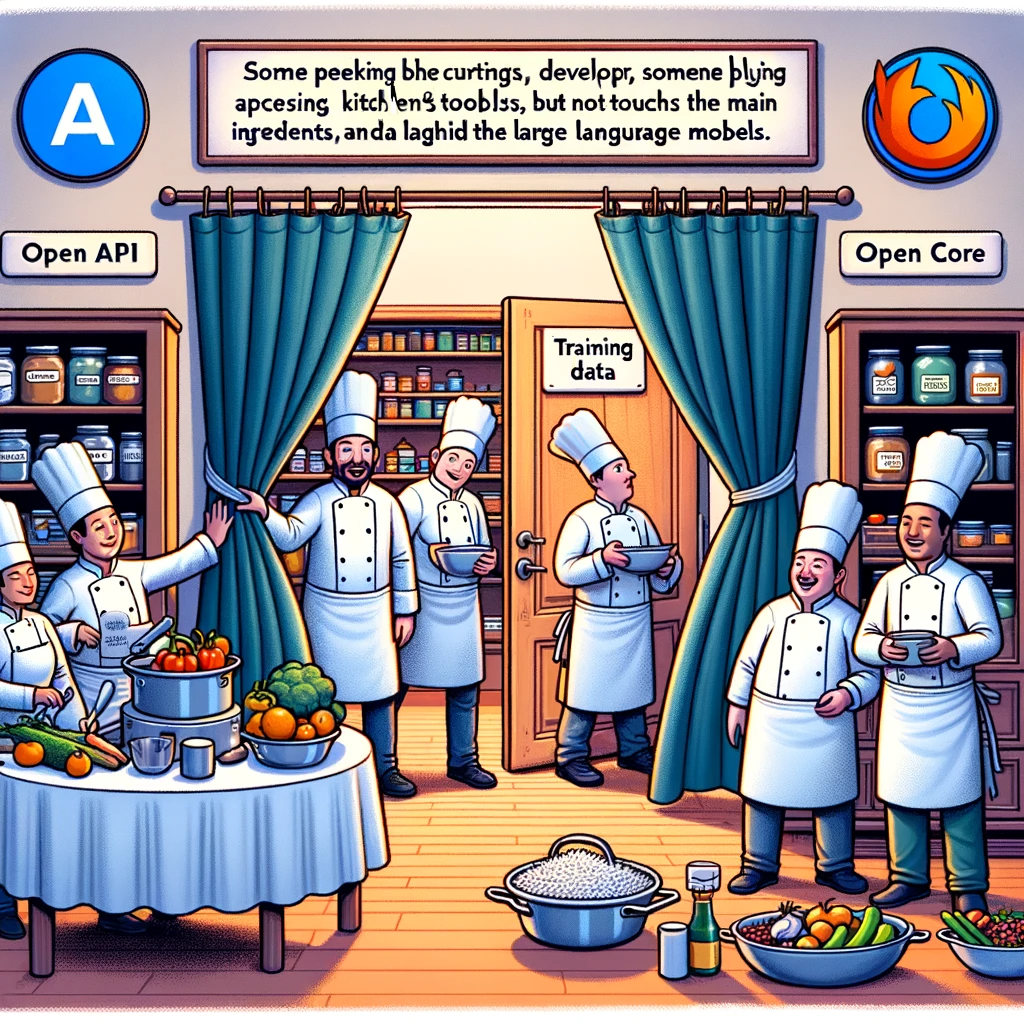

When we talk about opening up AI, we're not talking about a one-size-fits-all kind of deal. There are different levels to this openness: open API, open core, and completely open source. Each has its own flavor and brings something different to the table.

Open API is like being allowed to talk to whoever is behind the curtain without ever stepping behind it. Developers can send prompts to the LLM and get responses back, but they can’t inspect or change what’s behind the curtain. It’s a start, but the real magic lies in being able to tweak and tune the model.

Open Core takes things a step further. Here, developers can play around with parts of the model, but the heart of it remains locked away. It’s a nice balance, but it still keeps a leash on community-driven improvements.

Completely Open Source is where the gates are flung wide open. Anyone can jump in, tweak the model, share it, and improve it. It’s a playground for innovation, but it comes with its own set of challenges, like handling sensitive data and finding the sheer computing power needed to train these models.
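For readers who think in code, here is one way to picture the three shades side by side. It is only a rough sketch: the capability names and the mapping below are my own shorthand for the descriptions above (hypothetical labels, not a standard taxonomy or any vendor's actual terms), and real-world licenses are messier than three neat buckets.

```python
from enum import Enum, auto


class Capability(Enum):
    """Hypothetical labels for what a developer is allowed to do."""
    QUERY = auto()          # send prompts and read responses
    MODIFY_PARTS = auto()   # change the openly released components
    MODIFY_CORE = auto()    # change the core model itself
    REDISTRIBUTE = auto()   # share modified versions with others


# Assumed mapping, read straight off the three descriptions above.
OPENNESS_LEVELS = {
    "open_api": {Capability.QUERY},
    "open_core": {Capability.QUERY, Capability.MODIFY_PARTS},
    "open_source": {
        Capability.QUERY,
        Capability.MODIFY_PARTS,
        Capability.MODIFY_CORE,
        Capability.REDISTRIBUTE,
    },
}


def allows(level, capability):
    """Return True if the given openness level grants the capability."""
    return capability in OPENNESS_LEVELS[level]


def levels_allowing(capability):
    """List the openness levels that grant the capability, least open first."""
    return [name for name, caps in OPENNESS_LEVELS.items() if capability in caps]
```

Calling `levels_allowing(Capability.MODIFY_CORE)` returns only `"open_source"` under this mapping, which is the point of the comparison: each step up adds permissions, and only the last one hands over the heart of the model.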

Opening Up the AI Kitchen: Ready to See How the Sausage is Made?

The idea of open source is exciting. It’s like inviting everyone into the kitchen to cook together. But with LLMs, it's not just about sharing the recipe; it's also about sharing the ingredients, meaning the training data. And here’s where things get sticky. Are we ready to lay it all bare? Is the community ready to handle the not-so-nice parts of the training data?

Sharing the training data is a tricky business. It’s like opening up the pantry for everyone to see. It promotes honesty and teamwork, but it can also unveil some ingredients we’d rather keep hidden. And if we decide to clean up the pantry, will the dish still taste as good?

The chatter about open source usually circles around the code and algorithms. But with LLMs, we’re diving deeper and talking about sharing the training data too. This could push the open-source movement to new heights, or it could reveal challenges we still need to tackle. Either way, it makes the conversation around open source in AI richer and way more interesting.

Conclusion

Opening up LLMs completely comes with its fair share of hurdles. But the bigger picture shows a promising landscape. It’s like inviting fresh eyes and hands to contribute, aligning with the Mozilla Foundation’s call for a more open and friendly AI neighborhood. The conversation sparked by the Mozilla Foundation, mixed with a deeper look into the shades of open, lays down a path towards a more welcoming and inventive AI world.