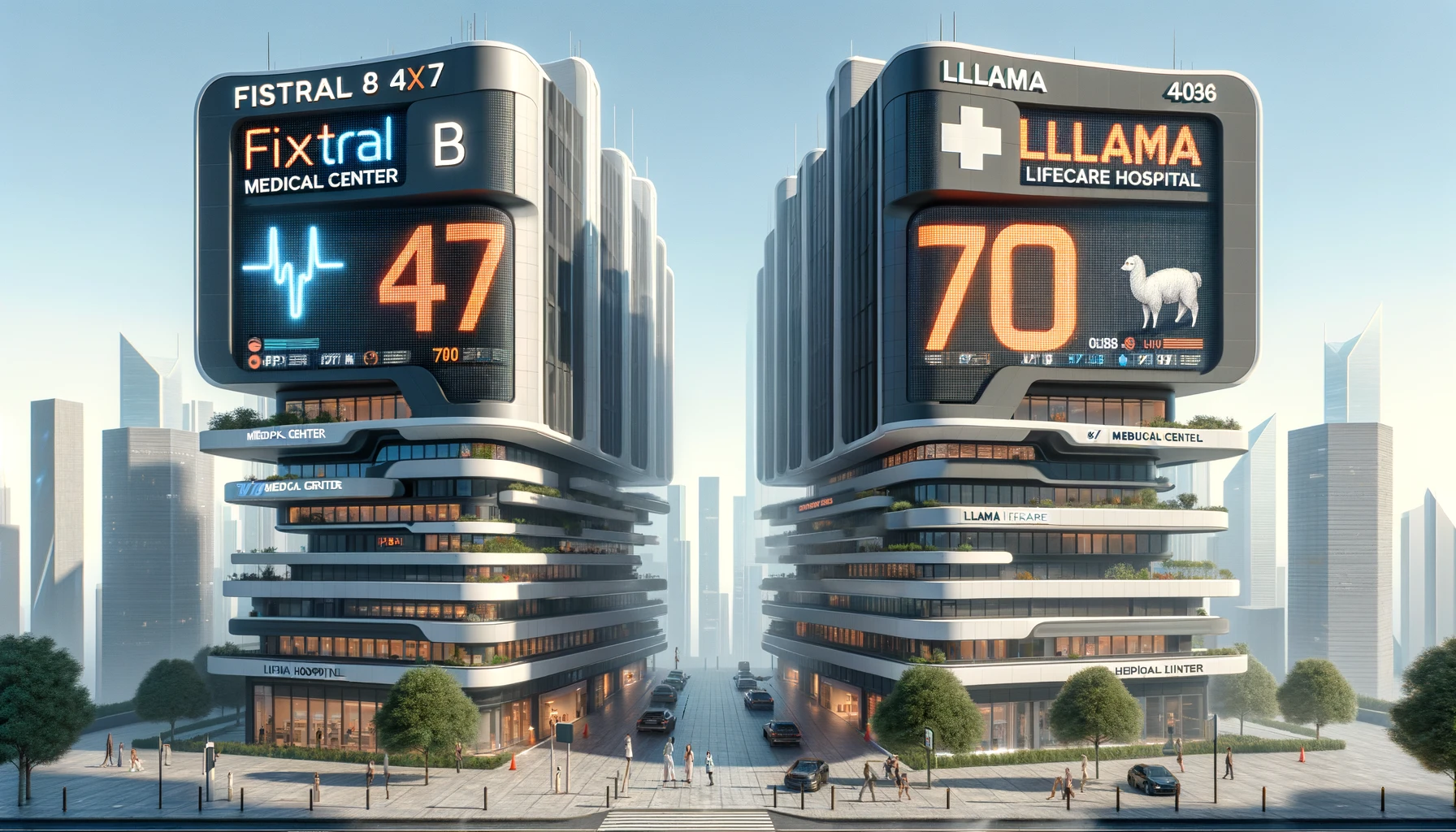

Fixtral 8x7 Medical Center is quickly establishing itself as a leader in healthcare, celebrated for its exceptional services and its distinctive, focused schedule of 8 hours a day, 7 days a week, hence its name. At the core of Fixtral 8x7's innovative approach is Dr. Jones, the 'router'. His crucial role is to guide each patient's care journey, directing them to the most fitting experts. The clinic is staffed by eight specialists, each utilizing 7 billion parameters to test and evaluate patients.

Because the specialists share some resources, the collective parameter count comes to about 47 billion rather than the 56 billion that eight times seven would suggest. Even so, Fixtral 8x7's efficient system ensures that a typical patient, referred to just two specialists, benefits from a focused examination involving only about 13 billion parameters. This precision underscores Fixtral 8x7's commitment to targeted and effective patient care.
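For readers who like to check the arithmetic, here is a rough back-of-the-envelope sketch in Python. It assumes a deliberately simplified picture in which every parameter is either shared by all specialists or belongs to exactly one of them, and it uses only the rounded figures quoted above (47 billion in total, 13 billion per patient). The function name `split_parameters` and the two-part split are illustrative assumptions, not a description of the real architecture.

```python
# Rounded figures from the text, in billions of parameters.
TOTAL = 47              # collective count across the whole clinic
ACTIVE = 13             # count involved when a patient sees two specialists
NUM_EXPERTS = 8
EXPERTS_PER_TOKEN = 2


def split_parameters(total, active, num_experts, experts_per_token):
    """Solve the two-equation system (simplifying assumption):

        total  = shared + num_experts       * per_expert
        active = shared + experts_per_token * per_expert
    """
    per_expert = (total - active) / (num_experts - experts_per_token)
    shared = total - num_experts * per_expert
    return shared, per_expert


shared, per_expert = split_parameters(TOTAL, ACTIVE, NUM_EXPERTS, EXPERTS_PER_TOKEN)
print(f"shared: ~{shared:.1f}B, per specialist: ~{per_expert:.1f}B")
```

Under these assumptions, each specialist owns roughly 5.7 billion parameters and about 1.7 billion are shared, which is why the total lands well below 56 billion and why the "7" in the name is best read as a nominal size.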

On the other hand, LLAMA Lifecare Hospital takes a more comprehensive approach by evaluating patients using a vast array of 70 billion parameters. However, a study sponsored by Fixtral 8x7 suggests that this extensive method may not necessarily result in improved patient outcomes. Moreover, it takes five times longer, thus emphasizing the effectiveness of Fixtral's focused and strategic approach.

Mixtral outperforms or matches Llama 2 70B performance on almost all popular benchmarks while using 5x fewer active parameters during inference.

A Patient's Journey

Emma enters with a mix of symptoms: a persistent cough and occasional breathlessness. Dr. Jones, akin to a router processing an input token, meticulously assesses these symptoms to determine the need for specialist consultation.

Dr. Jones refers Emma to two specialists: Dr. Clarke, a Pulmonologist, and Dr. Lee, an Allergist. Each specialist's examination, rooted in their 7 billion parameters, offers a thorough analysis of Emma's condition. Dr. Clarke's focus on respiratory health and Dr. Lee's allergen testing exemplify a parallel process, mirroring the efficient and precise expert selection in a sparse mixture of experts (SMoE).
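Dr. Jones's referral decision can be sketched in a few lines of Python. This is a minimal illustration of top-2 routing, assuming the router produces one score per specialist and that a softmax is applied over only the two highest scores. The scores below, and every specialty other than Pulmonologist and Allergist, are made up for the example.

```python
import math


def top2_route(scores):
    """Return [(expert_index, weight), (expert_index, weight)] for the
    two highest-scoring experts, with weights that sum to 1."""
    # Indices of the two largest router scores, best first.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:2]
    # Softmax restricted to the selected scores (shifted for numerical stability).
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Hypothetical staff list: only the Pulmonologist and Allergist come from the story.
specialists = [
    "Cardiologist", "Pulmonologist", "Allergist", "Neurologist",
    "Dermatologist", "Orthopedist", "Endocrinologist", "Ophthalmologist",
]
# Hypothetical router scores for Emma's symptoms, one per specialist.
emma_scores = [0.1, 2.0, 1.5, -0.3, 0.0, -1.2, 0.4, -0.8]

routes = top2_route(emma_scores)
for index, weight in routes:
    print(f"{specialists[index]}: weight {weight:.2f}")
```

The other six specialists are never consulted for Emma, which is where the savings come from: only the selected experts do any work for a given input.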

After the consultations, Dr. Jones integrates the findings from Dr. Clarke and Dr. Lee. This process mirrors the 'weighted sum' in SMoE, where outputs from different experts are combined to form a cohesive understanding. In Emma's case, the integration balances the insights from the Pulmonologist and Allergist, weighting each according to its relevance to her symptoms. This method ensures that the final diagnosis is comprehensive, reflecting the collective expertise of the specialists, much like how an SMoE model synthesizes the outputs of its selected experts to generate an accurate response.
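The weighted sum itself is simple enough to show directly. The sketch below assumes each specialist reports a small vector of findings and that Dr. Jones already holds one weight per specialist. The vectors, the weights, and the helper name `combine` are all hypothetical values chosen to keep the arithmetic easy to follow.

```python
def combine(expert_outputs, weights):
    """Element-wise weighted sum of equally sized expert output vectors."""
    size = len(expert_outputs[0])
    return [
        sum(w * output[d] for w, output in zip(weights, expert_outputs))
        for d in range(size)
    ]


# Hypothetical findings, e.g. [respiratory evidence, allergy evidence].
pulmonologist_findings = [0.8, 0.2]
allergist_findings = [0.4, 0.6]

# Hypothetical relevance weights from the router; they sum to 1.
weights = [0.6, 0.4]

diagnosis = combine([pulmonologist_findings, allergist_findings], weights)
print([round(value, 2) for value in diagnosis])
```

With these numbers the combined result leans toward the Pulmonologist's findings, because that consultation carried the larger weight.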

Conclusion

I hope you found this allegory both enjoyable and enlightening, especially in understanding the significant value proposition of Mixtral 8x7B. Mixtral is certainly making notable progress in enhancing model performance. Additionally, the adoption of the Apache 2.0 license is a positive step, offering more permissive usage and encouraging wider adoption.

From our lab's perspective, Mixtral shows a clear advantage over Llama 2, indicating its robust capabilities. However, it's important to recognize that Mixtral still has some ground to cover in its quest to rival the leading models in the field, such as GPT-4 and the Google Vertex/Gemini family of models. This journey reflects the ever-evolving nature of Generative AI technologies and the continuous push towards more advanced and efficient models.