We often anthropomorphize the collective operations of generative AI systems, labeling them as generative agents and foundation agents. However, at the core of these collective behaviors are neurons. Let's dive into understanding the critical role and journey of a neuron within the system.

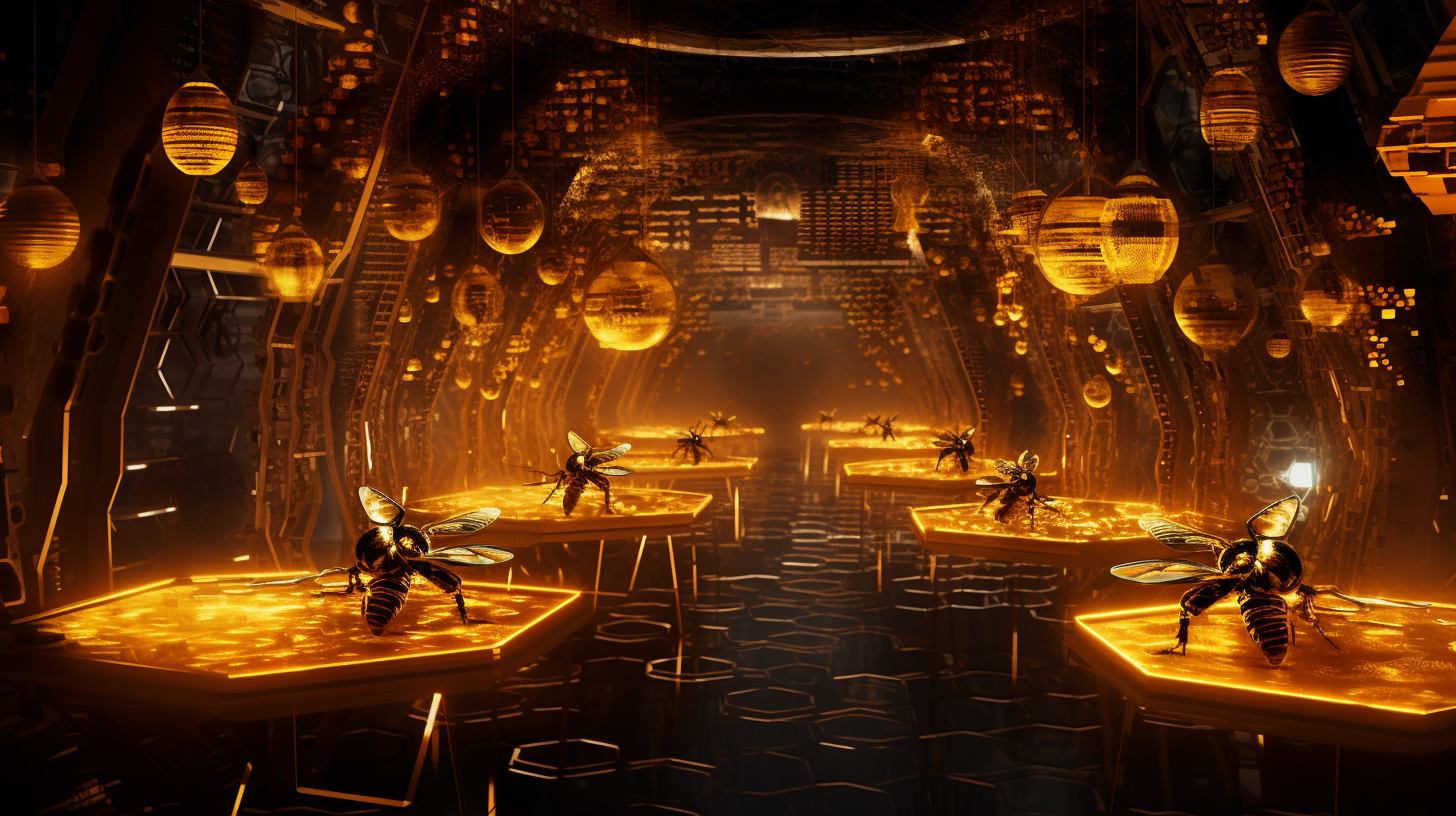

Consider a neuron as the diligent worker bee within the AI hive. It's important to acknowledge that in this hive, every worker bee not only aids the collective but also carries distinct characteristics. This uniqueness shines through in the way each neuron handles inputs from its surroundings and fellow neurons, as well as in how it produces outputs.

Neurons: The Whimsical Wizards of the AI Hive

Each input to a neuron comes with an associated weight, a measure of that input's significance. To simplify, one might imagine a single input x and its weight w as scalars in the equation y = f(wx + b), where b is the neuron's bias and f is its activation function. In practice, these elements typically expand into vectors, matrices, or even tensors. The weights let the network assign varying "trust levels" to inputs, whether they originate from external data sources or the outputs of other neurons within the network. As a result, each neuron's response is tailored to the weighted importance of the inputs it receives.
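To make the weighting concrete, here is a minimal sketch in plain Python of the wx term once x and w become vectors. The function name `weighted_sum` and the numbers are illustrative assumptions, not taken from any particular library.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by its weight and add the results (a dot product)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


# Hypothetical values: three inputs, each with its own "trust level".
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]

# 1.0*0.5 + 2.0*(-1.0) + 3.0*0.25 = -0.75
total = weighted_sum(inputs, weights)
print(total)
```

A negative weight, like the -1.0 here, means the neuron treats that input as evidence against firing rather than for it.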

Beyond tallying inputs and their weights, each neuron carries its own bias: a learned constant added to the weighted sum. The bias colors the neuron's perception of its inputs, akin to an individual's subjective viewpoint influencing their interpretation of information. Depending on one's perspective, this step either introduces or compensates for a predisposition, since it shifts how easily the neuron activates. The adjusted sum then passes through an activation function, which determines the neuron's output and its contribution to the network's subsequent layer.
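The full path from inputs to output can be sketched in a few lines of plain Python. The names `neuron_output`, `sigmoid`, and `relu` are illustrative, and the two activation functions are common choices assumed here for the example; the text above does not commit to any specific one.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1). Not hardened for extreme z."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive values through unchanged; clamp negatives to zero."""
    return max(0.0, z)


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute y = f(w . x + b) for a single neuron."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical values: the weighted sum is 1.0*0.5 + 2.0*(-0.5) = -0.5.
inputs = [1.0, 2.0]
weights = [0.5, -0.5]

print(neuron_output(inputs, weights, bias=0.5))         # z = 0.0, sigmoid gives 0.5
print(neuron_output(inputs, weights, bias=1.5, activation=relu))   # z = 1.0
print(neuron_output(inputs, weights, bias=-1.0, activation=relu))  # z = -1.5, clamped to 0.0
```

With the inputs and weights held fixed, changing only the bias moves the same neuron from silent to active, which is the "predisposition" described above.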

This nuanced interplay between inputs, weights, biases, and activation functions underpins the neural network's ability to learn and make decisions. By analogizing neurons to worker bees within a hive, each with its own "mind" and predispositions, we gain a vivid picture of how individual components within a neural network collaborate and compete. The activation function serves as the final arbiter of each neuron's output, ensuring that the collective behavior of these neural "worker bees" leads to intelligent, informed responses to complex problems.

Conclusion

Our journey through the world of Large Language Models (LLMs) often embraces a broader perspective, so taking a moment to shift towards a more granular viewpoint can be enlightening. In this discussion, we've peeled back the layers to examine the fundamental mechanisms of input and output processing within a neuron. Moving forward, we'll gradually expand our exploration to uncover how these diligent worker bees navigate the processing of intricate and even multi-modal information. Stay tuned for more insights in our upcoming newsletters.