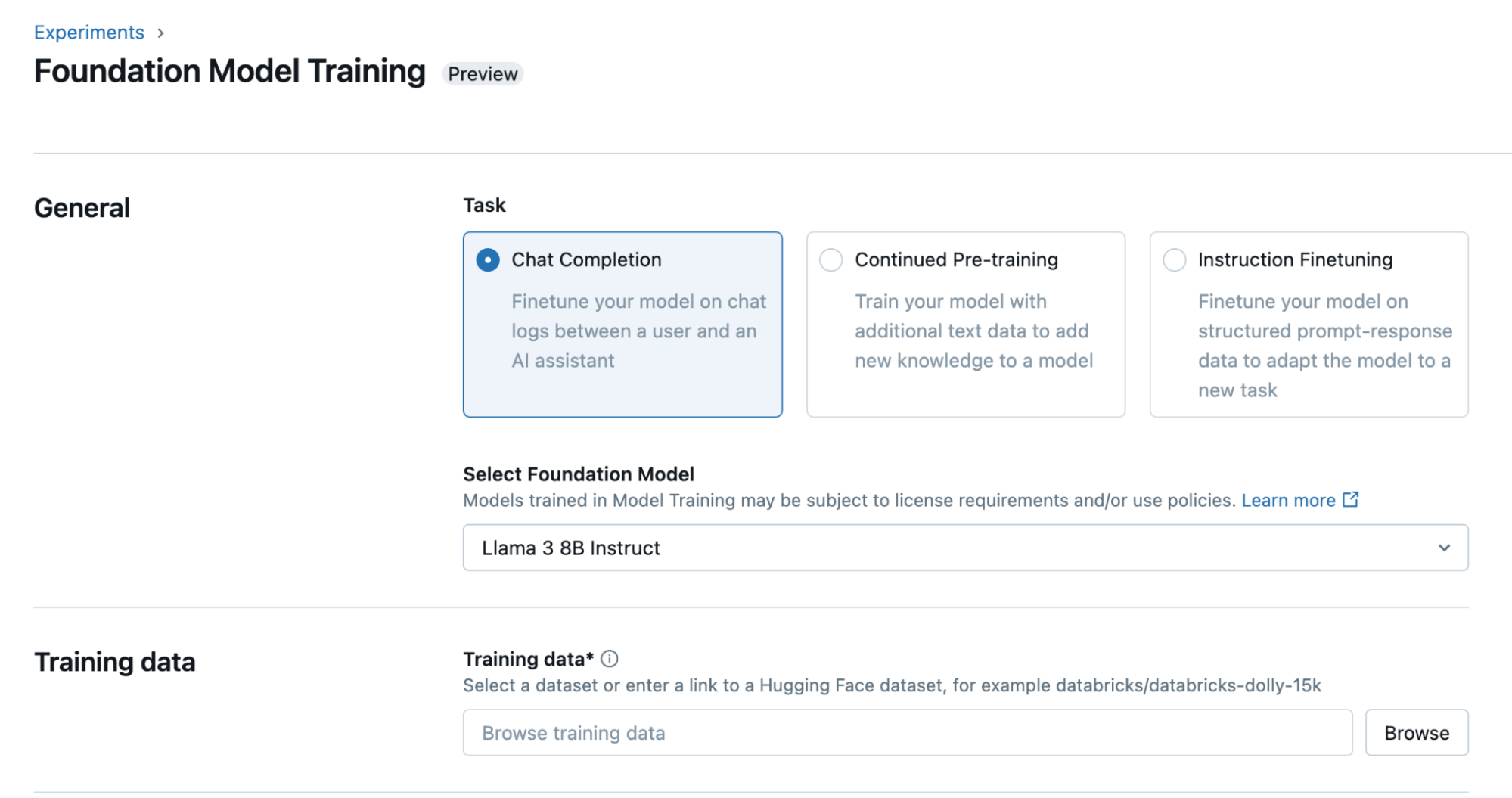

As I was going through Mosaic AI's announcement this week about "Mosaic AI: Build and deploy production-quality Compound AI Systems", I noticed an interesting detail under the Foundational Model Training section:

Image Copyright Databricks

Users only have to select a task and base model and provide training data (as a Delta table or a .jsonl file) to get a fully fine-tuned model that they own for their specialized task.

This piqued my interest. With my recent focus on containers and AI, I suspected my knowledge of data lakes had become somewhat outdated and that I might have missed significant developments in recent years. To recap, in my earlier encounters with data lakes, two file formats dominated: Parquet and JSON, with Avro trailing as a distant third. The .jsonl file in the announcement looked like a JSON variant, but the Delta table format was unfamiliar territory for me.

The More Things Change, the More They Stay the Same

Had I really missed that much, or were both formats built on the same foundations with new, more advanced structure on top? On closer inspection, the Delta table format and .jsonl files turn out to be evolutions of the Parquet and JSON formats I already knew.

Revisiting Parquet

Parquet is a columnar storage format: within each row group, the values of each column are stored together in a column chunk. This layout compresses well, because similar values sit next to each other, and it lets a query engine read only the columns a query actually needs, which speeds up execution. Parquet also uses encoding techniques to minimize redundancy. Dictionary encoding stores each distinct value once in a dictionary and replaces every occurrence with its index, which is particularly effective for columns with few distinct values. Run-length encoding compresses runs of consecutive repeated values by storing only the value and the number of repetitions.
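To make the two encodings concrete, here is a minimal Python sketch that dictionary-encodes a low-cardinality column and then run-length encodes the resulting indices. It is a toy model of the idea, not Parquet's actual byte layout: real Parquet writers store dictionary indices with a hybrid of run-length encoding and bit-packing, which this sketch omits. The function names are hypothetical.

```python
from itertools import groupby

def dictionary_encode(values):
    """Replace each value with its index into a list of distinct values."""
    dictionary = []  # distinct values, in first-seen order
    index_of = {}    # value -> position in dictionary
    indices = []
    for v in values:
        if v not in index_of:
            index_of[v] = len(dictionary)
            dictionary.append(v)
        indices.append(index_of[v])
    return dictionary, indices

def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(v, len(list(run))) for v, run in groupby(values)]

def run_length_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    return [v for v, n in runs for _ in range(n)]

# A low-cardinality column, e.g. a country code per row
column = ["US", "US", "US", "DE", "DE", "US", "FR", "FR", "FR", "FR"]

dictionary, indices = dictionary_encode(column)
runs = run_length_encode(indices)

print(dictionary)  # ['US', 'DE', 'FR']
print(indices)     # [0, 0, 0, 1, 1, 0, 2, 2, 2, 2]
print(runs)        # [(0, 3), (1, 2), (0, 1), (2, 4)]
```

Ten strings shrink to three dictionary entries plus four small runs, and decoding the runs and looking each index up in the dictionary restores the original column exactly.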

Moreover, Parquet stores statistics, such as minimum and maximum values, for each column chunk in the file's footer metadata. These statistics enable data skipping during query execution: when a query's filter cannot match any value in a row group's recorded range, the reader can skip that row group's data entirely. For example, if a query is searching for values greater than 100, but a row group's maximum for that column is 50, the reader can safely skip that row group.
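The skipping logic can be sketched in plain Python. This is a simplified model, assuming a single numeric column split into hypothetical `RowGroup` objects whose min/max are computed at write time; it is not the API of any Parquet reader, which would read these statistics from the file footer instead.

```python
from dataclasses import dataclass, field

@dataclass
class RowGroup:
    """One row group's slice of a single numeric column, plus its min/max stats."""
    values: list
    min_value: float = field(init=False)
    max_value: float = field(init=False)

    def __post_init__(self):
        # Statistics are computed once when the data is written, not at query time
        self.min_value = min(self.values)
        self.max_value = max(self.values)

def scan_greater_than(row_groups, threshold):
    """Return values > threshold and how many row groups actually had to be read."""
    matches, groups_read = [], 0
    for rg in row_groups:
        if rg.max_value <= threshold:
            continue  # no value in this group can exceed the threshold: skip it
        groups_read += 1
        matches.extend(v for v in rg.values if v > threshold)
    return matches, groups_read

row_groups = [
    RowGroup([3, 17, 50, 8]),      # max 50: skipped for "> 100"
    RowGroup([120, 95, 101, 40]),  # max 120: must be read
    RowGroup([1, 2, 3]),           # max 3: skipped
]

matches, groups_read = scan_greater_than(row_groups, 100)
print(matches, groups_read)  # [120, 101] 1
```

Only one of the three row groups is read; the other two are ruled out by their maximums alone, which is the same reasoning as the "greater than 100" example above.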

From Parquet to Delta Table

Delta tables, the table format of Delta Lake developed by Databricks, build upon Parquet by adding a transaction log. The data itself stays in ordinary Parquet files, while the log, stored as JSON commit files in a _delta_log directory alongside the data, records every change to the table (inserts, updates, deletes) as an ordered sequence of commits. This log is what enables features like ACID transactions, schema enforcement, and time travel.
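A minimal sketch of how such a log works: each commit is a file named by its zero-padded version number, containing one JSON action per line, and the current set of data files is reconstructed by replaying `add` and `remove` actions in order. This is heavily simplified, assuming only `add`/`remove` actions with just a `path` field; real Delta commits carry more fields and action types (for example `metaData` and `commitInfo`), and readers also use Parquet checkpoints. The helper functions are hypothetical; to read real tables, use Spark or the delta-rs `deltalake` package.

```python
import json
import tempfile
from pathlib import Path

def write_commit(log_dir: Path, version: int, actions: list) -> None:
    """Write one commit: a file named by zero-padded version, one JSON action per line."""
    path = log_dir / f"{version:020d}.json"
    path.write_text("\n".join(json.dumps(a) for a in actions) + "\n")

def active_files(log_dir: Path, as_of_version: int) -> set:
    """Replay commits 0..as_of_version and return the data files that form the table."""
    files = set()
    for version in range(as_of_version + 1):
        commit = log_dir / f"{version:020d}.json"
        for line in commit.read_text().splitlines():
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files

with tempfile.TemporaryDirectory() as table_dir:
    log_dir = Path(table_dir) / "_delta_log"
    log_dir.mkdir()
    # Version 0: the initial insert writes two Parquet files
    write_commit(log_dir, 0, [
        {"add": {"path": "part-0001.parquet"}},
        {"add": {"path": "part-0002.parquet"}},
    ])
    # Version 1: an update rewrites part-0002 (remove the old file, add its replacement)
    write_commit(log_dir, 1, [
        {"remove": {"path": "part-0002.parquet"}},
        {"add": {"path": "part-0003.parquet"}},
    ])
    current = sorted(active_files(log_dir, 1))   # latest version
    version_0 = sorted(active_files(log_dir, 0))  # "time travel" to version 0

print(current)    # ['part-0001.parquet', 'part-0003.parquet']
print(version_0)  # ['part-0001.parquet', 'part-0002.parquet']
```

Note that the Parquet files are never modified in place: an update adds new files and marks old ones as removed, which is why replaying the log up to an earlier version recovers the table as it was then.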

The concept of a transaction log is similar to the change logs used in Hadoop and Kafka. In Hadoop, the HDFS NameNode's edit log records metadata changes to keep the HDFS namespace consistent. Similarly, Kafka Streams uses changelog topics to record state changes for stream processing, so state can be replicated and restored after a failure. The Delta transaction log goes further: it records both table metadata, such as the schema, and which data files each commit added or removed. Replaying the log reconstructs the table at any version, which provides consistent reads, access to historical data, and safe handling of concurrent updates.

From JSON to JSONL

Similarly, the .jsonl file format is an evolution of JSON. In JSON Lines, each line of the file is a complete, valid JSON value (typically an object), and records are separated by newlines. Because there is no enclosing array, files can be read and written incrementally: each line can be parsed on its own without loading the entire file into memory, and new records can simply be appended to the end. This is particularly useful for log data and for tasks such as model training, where data is fed to a model in smaller, manageable batches.
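The incremental reading described above takes only the standard library. The sketch below writes a small .jsonl file and then streams it back in fixed-size batches, parsing one line at a time. The `prompt`/`response` field names are hypothetical, chosen only for illustration, and are not the schema Mosaic AI's fine-tuning actually requires.

```python
import json
import tempfile
from itertools import islice
from pathlib import Path
from typing import Iterable, Iterator

def write_jsonl(path: Path, records: Iterable[dict]) -> None:
    """Write each record as one JSON object on its own line."""
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")

def read_jsonl_batches(path: Path, batch_size: int) -> Iterator[list]:
    """Lazily yield lists of up to batch_size records, parsing one line at a time."""
    with path.open(encoding="utf-8") as f:
        # Generator: lines are parsed only as batches are requested; blank lines skipped
        records = (json.loads(line) for line in f if line.strip())
        while batch := list(islice(records, batch_size)):
            yield batch

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "train.jsonl"
    # Hypothetical prompt/response records, purely for illustration
    write_jsonl(path, ({"prompt": f"question {i}", "response": f"answer {i}"} for i in range(5)))
    with path.open(encoding="utf-8") as f:
        first_line = f.readline().rstrip("\n")
    batch_sizes = [len(batch) for batch in read_jsonl_batches(path, batch_size=2)]

print(first_line)   # {"prompt": "question 0", "response": "answer 0"}
print(batch_sizes)  # [2, 2, 1]
```

Because each line is independent, the reader never needs the whole file in memory, and a writer could append a sixth record with a single `f.write` in append mode without rewriting anything.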

Conclusion

In the rapidly evolving world of data and AI, it's easy to feel overwhelmed by the emergence of new technologies and formats. However, upon closer examination, it becomes clear that many of these advancements are built upon the solid foundations of existing standards and concepts.

The Delta table format and .jsonl files, mentioned in Mosaic AI's announcement, are a testament to this evolutionary process. Delta tables enhance the capabilities of the Parquet format by introducing a transaction log, enabling features like ACID transactions, schema enforcement, and time travel. Similarly, the .jsonl format extends JSON by allowing large datasets to be processed incrementally and more efficiently.